The first year of President Trump’s second term has seen an aggressive rollback of federal environmental protections, which advocacy groups fear will bring more pollution, higher health risks, and less information and power for Texas communities, especially in heavily industrial and urban areas.

Within Trump’s first 100 days in office, his new Environmental Protection Agency administrator, Lee Zeldin, announced a sweeping slate of 31 deregulatory actions. The list, which Zeldin called the agency’s “greatest day of deregulation,” targeted everything from soot standards and power plant pollution rules to the Endangerment Finding, the legal and scientific foundation that obligates the EPA to regulate climate-changing pollution under the Clean Air Act.

Since then, the agency has frozen research grants, shrunk its workforce, and removed some references to climate change and environmental justice from its website. Environmental advocates say those moves send a clear signal: the EPA’s new direction will come at the expense of public health.

Cyrus Reed, conservation director of the Lone Star Chapter of the Sierra Club, said Texas is one of the states that feels EPA policy changes directly because the state has shown little interest in stepping up its environmental enforcement as the federal government scales back.

“If we were a state that was open to doing our own regulations there’d be less impact from these rollbacks,” Reed said. “But we’re not.”

“Now we have an EPA that isn’t interested in enforcing its own rules,” he added.

Richard Richter, a spokesperson for the state’s environmental agency, the Texas Commission on Environmental Quality, said in a statement that the agency takes protecting public health and natural resources seriously and acts consistently and quickly to enforce federal and state environmental laws when they’re violated.

Methane rules put on pause

A major EPA move centers on methane, a potent greenhouse gas that traps heat far more efficiently than carbon dioxide over the short term. It accounts for roughly 16% of global greenhouse gas emissions and is a major driver of climate change. In the U.S., the largest source of methane emissions is the energy sector, especially in Texas, the nation’s top oil and gas producer.

In 2024, the Biden administration finalized long-anticipated rules requiring oil and gas operators to sharply reduce methane emissions from wells, pipelines, and storage facilities. The rule, developed with industry input, targeted leaks, equipment failures, and routine flaring, the burning off of excess natural gas at the wellhead.

Under the rule, operators would have been required to monitor emissions, inspect sites with gas-imaging cameras for leaks, and phase out routine flaring. States are required to come up with a plan to implement the rule, but Texas has yet to do so. Under Trump’s EPA, that deadline has been extended until January 2027 — an 18-month postponement.

Texas doesn’t have a rule to capture escaping methane emissions from energy infrastructure. Richter, the TCEQ spokesperson, said the agency continues to work toward developing the state plan.

Adrian Shelley, Texas director of the watchdog group Public Citizen, said the rule represented a rare moment of alignment between environmentalists and major oil and gas producers.

“I think the fossil fuel industry generally understood that this was the direction the planet and their industry was moving,” he said. Shelley said uniform EPA rules provided regulatory certainty for changes operators saw as inevitable.

Reed, the Sierra Club conservation director, said the delay of methane rules means Texas still has no plan to reduce emissions, while neighboring New Mexico already has imposed its own state methane emission rules that require the industry to detect and repair methane leaks and ban routine venting and flaring.

These regulations have cut methane emissions in the New Mexico portion of the Permian Basin — the oil-rich area that covers West Texas and southeast New Mexico — to half that of Texas, according to a recent data analysis by the Environmental Defense Fund. That’s despite New Mexico doubling production since 2020.

A retreat from soot standards

Fine particulate matter, or PM2.5, is one of six pollutants regulated under the Clean Air Act, and researchers have called it the deadliest form of air pollution.

In 2024, the EPA under President Biden strengthened air rules for particulate matter by lowering the annual limit from 12 to 9 micrograms per cubic meter. It was the first update since 2012 and one of the most ambitious pieces of Biden’s environmental agenda, driven by mounting evidence that particulate pollution is linked to premature death, heart disease, asthma, and other respiratory illnesses.

After the rule was issued, 24 Republican-led states, including Kentucky and West Virginia, sued to revert to the weaker standard. Texas filed a separate suit asking to block the rule’s recent expansion.

State agencies are responsible for enforcing the federal standards. The TCEQ is charged with creating a list of counties that exceed the federal standard and submitting those recommendations to Gov. Greg Abbott, who then finalizes the designations and submits them to the EPA.

Under the 9 microgram standard, parts of Texas, including Dallas, Harris (which includes Houston), Tarrant (Fort Worth), and Bowie (Texarkana) counties, were in the process of being designated nonattainment areas — which, when finalized, would trigger a legal requirement for the state to develop a plan to clean up the air.

That process stalled after Trump returned to office. Abbott submitted his designations to the EPA last February, but the agency has not yet acted on them, according to Richter, the TCEQ spokesperson.

In a court filing last year, the Trump EPA asked a federal appeals court to vacate the stricter standard, bypassing the traditional notice-and-comment administrative process.

For now, the rule technically remains in effect, but environmental advocates say the EPA’s retreat undermines enforcement of the rule and signals to polluters that it may be short-lived.

Shelley, with Public Citizen, believes the PM2.5 rule would have delivered the greatest health benefit of any EPA regulation affecting Texas, particularly through reductions in diesel pollution from trucks.

“I still hold out hope that it will come back,” he said.

Unraveling the climate framework

Beyond individual pollutants, the Trump EPA has moved to dismantle the federal architecture for addressing climate change.

Among the proposals is eliminating the Greenhouse Gas Reporting Program, which requires power plants, refineries, and oil and gas suppliers to report annual emissions. The proposal has drawn opposition from both environmental groups and industry, which relies on the data for planning and compliance.

Colin Leyden, Texas state director and energy lead at the nonprofit Environmental Defense Fund, said eliminating the program could hurt Texas industry. If methane emissions are no longer reported, then buyers and investors of natural gas, for example, won’t have an official way to measure how much methane pollution is associated with that gas, according to Leyden. That makes it harder to judge how “clean” or “climate-friendly” the product is, which international buyers are increasingly demanding.

“This isn’t just bad for the planet,” he said. “It makes the Texas industry less competitive.”

Last year, the administration also proposed rescinding the Endangerment Finding, issued in 2009, which obligates the EPA to regulate climate pollution. Most recently, the EPA said it will stop calculating how much money is saved in health care costs as a result of air pollution regulations that curb PM2.5 and ozone, a component of smog. Both can cause respiratory and other health problems.

Leyden said tallying up the dollar value of lives saved when evaluating pollution rules has been a foundational principle of the EPA since its creation.

“That really erodes the basic idea that (the EPA) protects health and safety and the environment,” he said.

___

This story was originally published by The Texas Tribune and distributed through a partnership with The Associated Press.

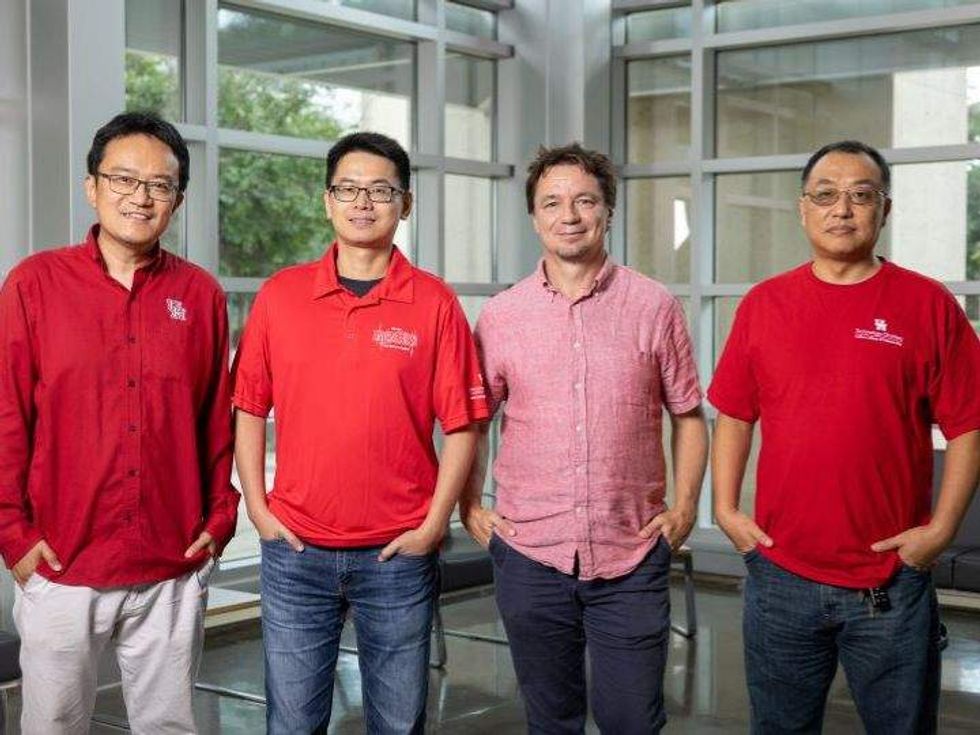

The University of Houston team: Xiaonan Shan, associate professor of electrical and computer engineering, Jiefu Chen, associate professor of electrical and computer engineering, Lars Grabow, professor of chemical and biomolecular engineering, and Xuquing Wu, associate professor of information science technology. Photo via UH.edu
